In the grand scale of Internet of Things (IoT) and Machine-to-Machine (M2M) communication, it is tempting to use HTTP and specifically HTTPS as a communication protocol between devices and servers. The most prominent reasons for choosing to use the HTTPS protocol are:

A device can use a small HTTP client library together with a small SSL stack, making it very convenient for the device computer programmers to design an HTTPS based IoT protocol that can communicate with any type of backend application/web server. Although a web server can handle HTTPS requests, it cannot typically handle the business logic required for managing the data sent to and from the connected devices, so in most cases an application server is required for the backend infrastructure.

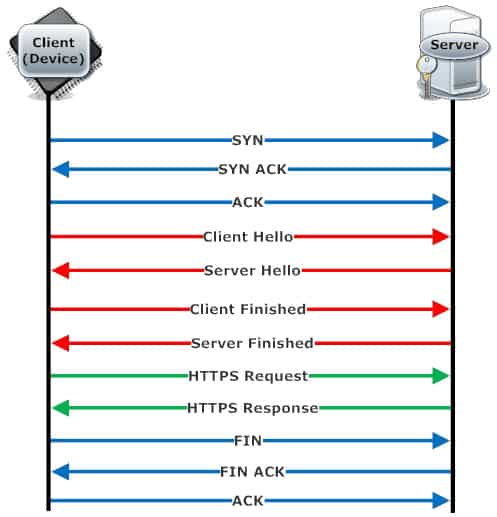

You may think that sending a simple HTTPS message from the device and receiving a simple HTTPS response from the server does not impose a lot of protocol overhead. However, what appears to be a simple HTTPS request sent from the client and simple HTTPS response from the server, is actually a long sequence of commands sent between the client and server. Let's take a look at what goes on behind the scene in a typical HTTPS client request and server response:

Figure 1 Shows the handshaking sequence for the three protocols: TCP/IP (blue), SSL(red), and HTTPS (green).

As you see from figure 1, what appears from a higher level perspective as a single HTTPS request/response is actually a set of several messages sent on the wire. This imposes a problem in HTTPS based systems that require data sent from the server to the device client since such systems require that the client polls the server for updates. The more frequent the polls, the more load placed on the server. As an example, assume we design a remote control system for opening and closing garage doors.

Figure 2 shows how the microcontroller based garage door controller continuously sends HTTPS poll requests to the server.

A simple web based phone app, connected to the online server, lets the subscriber remotely manage his/her own garage door. Say you forget to close your garage door. You open your phone app(a client), and you click the close door button. The message is immediately sent to the online server. The server finds the corresponding garage door IoT device from the connected phone app's user credentials. The server cannot immediately send the close door control message to the garage door IoT device client. Instead it saves the control command message that it received from the phone and waits for the next poll request from the garage door IoT device client. The close garage door control command will then be sent as the response message to the client poll request.

How long are you prepared to wait for the garage door to close after you press the close button? Probably not that long, and probably not longer than one minute. This means the device poll frequency must be at least in one minute intervals.

Now, say that the online server manages 250,000 garage doors, each polling the server for data every minute. The server will then need to handle 250,000 HTTPS request/response pairs every minute or 4 request/response pairs every millisecond. This will obviously create a huge network load, but the big question is if the server can handle that many connection requests. Recall that for each HTTPS request/response pair, we have at a bare minimum 9 messages sent on the wire. This means the server must be able to handle 36 low level messages every millisecond. In addition, the very heavy to compute asymmetric key encryption places a huge computation load on the server. Each HTTPS handshaking requires that the server performs asymmetric key encryption for that particular connection.

The obvious solution to the problem is to use persistent connections. A high end TCP/IP stack easily handles 250K, or 500K, or even more persistent socket connections. The question is if your web/application server solution can handle as many connections and how much memory the server uses if it supports this many connections. For this reason, selecting a good web/application server solution is very important. We will discuss this in greater detail later.

A standard TCP/IP socket connection is by definition persistent, and it can handle messages sent in both directions at the same time. Creating a custom listen server may not be part of your web/application server infrastructure/API, and a custom non HTTPS based protocol will prevent the client from penetrating firewalls and proxies. What we need is a protocol that starts out as HTTPS and then morphs into a secure persistent socket connection, keeping all the benefits of HTTPS.

The WebSocket protocol defined in RFC 6455 specifies how a standard HTTP request/response pair can be upgraded to a persistent full duplex connection. When using SSL, it lets you morph a HTTPS connection into a secure persistent socket connection. WebSocket-based applications enable real-time communication just like a regular socket connection. What makes WebSocket unique is that it inherits all of the benefits of HTTPS since it initially starts as a HTTPS request/response pair. This means that you can bypass firewalls/proxies, communicate over SSL, and easily provide authentication.

The following embedded iframe shows our online real time LED demo. Controlling LEDs (lights) via an online server requires real time features similar to remotely controlling a garage door. The browser-server communication uses the SMQ pub/sub protocol, which uses WebSockets for the transport. The server acts as a proxy for communication between the user interfaces (browsers) and devices.

Connect your own simulated device to the above online IoT server by compiling the SMQ LED Example using an online C compiler. Click the Run button and wait for the code to compile and run. You should then see one more device show up in the above user interface. Alternatively, download the C code from GitHub.

Navigate the world of embedded web servers and IoT effortlessly with our comprehensive tutorials. But if time isn't on your side or you need a deeper dive, don't fret! Our seasoned experts are just a call away, ready to assist with all your networking, security, and device management needs. Whether you're a DIY enthusiast or seeking expert support, we're here to champion your vision.

Expedite your IoT and edge computing development with the "Barracuda App Server Network Library", a compact client/server multi-protocol stack and IoT toolkit with an efficient integrated scripting engine. Includes Industrial Protocols, MQTT client, SMQ broker, WebSocket client & server, REST, AJAX, XML, and more. The Barracuda App Server is a programmable, secure, and intelligent IoT toolkit that fits a wide range of hardware options.

SharkSSL is the smallest, fastest, and best performing embedded TLS stack with optimized ciphers made by Real Time Logic. SharkSSL includes many secure IoT protocols.

SMQ lets developers quickly and inexpensively deliver world-class management functionality for their products. SMQ is an enterprise ready IoT protocol that enables easier control and management of products on a massive scale.

SharkMQTT is a super small secure MQTT client with integrated TLS stack. SharkMQTT easily fits in tiny microcontrollers.

An easy to use OPC UA stack that enables bridging of OPC-UA enabled industrial products with cloud services, IT, and HTML5 user interfaces.

Use our user programmable Edge-Controller as a tool to accelerate development of the next generation industrial edge products and to facilitate rapid IoT and IIoT development.

Learn how to use the Barracuda App Server as your On-Premises IoT Foundation.

The compact Web Server C library is included in the Barracuda App Server protocol suite but can also be used standalone.

The tiny Minnow Server enables modern web server user interfaces to be used as the graphical front end for tiny microcontrollers. Make sure to check out the reference design and the Minnow Server design guide.

Why use FTP when you can use your device as a secure network drive.

PikeHTTP is a compact and secure HTTP client C library that greatly simplifies the design of HTTP/REST style apps in C or C++.

The embedded WebSocket C library lets developers design tiny and secure IoT applications based on the WebSocket protocol.

Send alarms and other notifications from any microcontroller powered product.

The RayCrypto engine is an extremely small and fast embedded crypto library designed specifically for embedded resource-constrained devices.

Real Time Logic's SharkTrust™ service is an automatic Public Key Infrastructure (PKI) solution for products containing an Embedded Web Server.

The Modbus client enables bridging of Modbus enabled industrial products with modern IoT devices and HTML5 powered HMIs.